I’m Visually Impaired. Apple Vision Pro Is An Amazing Assistive Device.

I’m albino. My eyes are … different.

In many ways, the world is not built for me. Every time I open Outlook on my iPhone and Face ID pops up, I have to pull the phone far away from its usual position — an inch and a half from my face — because the TrueDepth sensors on the front that identify me were not designed to work at a distance that makes sense for my eyes.

My Photos app is mostly filled with pictures I’ve taken of menus at fast-food restaurants, or the occasional row of beer taps at a bar — I have no chance at knowing what’s there unless I can zoom in.

I can’t legally drive without crazy-expensive bioptic telescopes that take months of training. I stopped biking to work after realizing that the spatial awareness needed to not get mowed down by lawless SF drivers was not something my depth-perception-less eyes could pull off.

For the most part, this didn’t cause too much of an issue for me in med school. As it turns out, most of the tasks that you need eyes for are amenable to assistive devices — for example, an iPhone-connected digital otoscope — that I could take along with me when I needed them. I could always do what I had to do.

But now I’m in grad school, working on a data science–heavy PhD, and I’m face-to-face with a nemesis that only lightly taunted me before: my computer.

Oh! — the fits of jealousy I have let out, watching people casually use their laptops at a reasonable, comfortable reading distance. If ever I am away from a 24-inch-plus monitor, my only hopes for being able to see my screen are to (A) contort myself so my face is embedded in the display, (B) deal with the puny field of view of loupes, or (C) crank up the full-screen zoom feature built into macOS — a godsend, yes, but also a bit awkward when I have to blow up my Slack messages to 80-point font in the office to read them.

Yikes. Been burned by that one.

And so, I pre-ordered Apple Vision Pro, even on my meager grad student stipend.

Now, I’m not a newbie to working in a VR workstation; I spent my tech years doing computational work inside an Oculus Rift, despite the screen-door effect and the stream of ginger chews for the nausea. But Ooh! — the power of being surrounded on all sides by Jupyter notebooks!

The Vision Pro, though, is something to behold. If you want to know how good it is, just watch Apple’s ads. Or Minority Report. It really does just work as advertised. The inside-out tracking is so good that I can open a web browser in my office on the 6th floor of the Weill building, walk down the stairs to the kitchen on the 5th floor and check my email, and then walk back up the stairs to the 6th floor to find the browser exactly where I left it. It’s bananas.

But for me, the most amazing part is the attention to detail Apple has given to differences in vision.

Wear prescription glasses? Click in a pair of magnetic lens inserts from Zeiss — the headset registers your prescription when they’re attached and uses it to correct screen rendering and eye tracking artifacts.

Have strabismus? No problem: the Accessibility menu lets you turn off foveated rendering (tough to do right for eyes that don’t come together) and switch the eye-tracked navigation to your dominant eye (or even to hand gestures only).

Even my nystagmus and my lack of retinal melanin to absorb IR backscattering — both of which have befuddled research-grade eye-tracking systems in the past — pose no issues for the Vision Pro, as long as I make the user interface a little bigger to account for the eye wiggles.

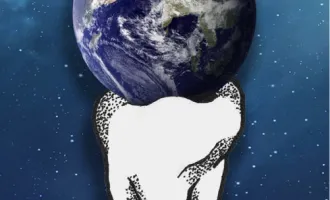

Which — I mean, why not? When your monitor is space, it’s not like you’re going to run out of room.

You may yuk it up as you’re taking candids of me out working on the quad. (Yes, I can see you.) But as a disabled person, the ability to finally sit back with my feet up on a bench out in the sun while working on my laptop — or more accurately, while working on a 30-foot-wide 4K screen floating in exactly the perfect ergonomic position, one that I can reposition anywhere I want it to be at any moment — is the answer to decades of prayers to the accessibility gods.

Can’t read my text messages? Why don’t I just pull them right next to my face?

Not enough room on my 13-inch laptop screen for the four code windows I’m looking at to be visible at the 20-point font I need to read? What if I just made the screen 15 feet tall?

For me, the extra bit of weight from strapping an iPad to my face is worth it. Because now I get to feel, for the first time, like I can use a computer the way I want to — and not the way I have to, because it’s designed for other people’s eyes.

So, if you see me with my Vision Pro on, don’t mind me — I’m just over here living in the 24th century like Geordi La Forge.